Tiered Storage Hierarchy

ZFS supports cloned filesystems with copy-on-write (CoW) thin provisioning, which makes efficient use of storage. Even when many devices use identical images, each device's actual on-disk size is only as large as the changes that device has written to the server since it was provisioned.

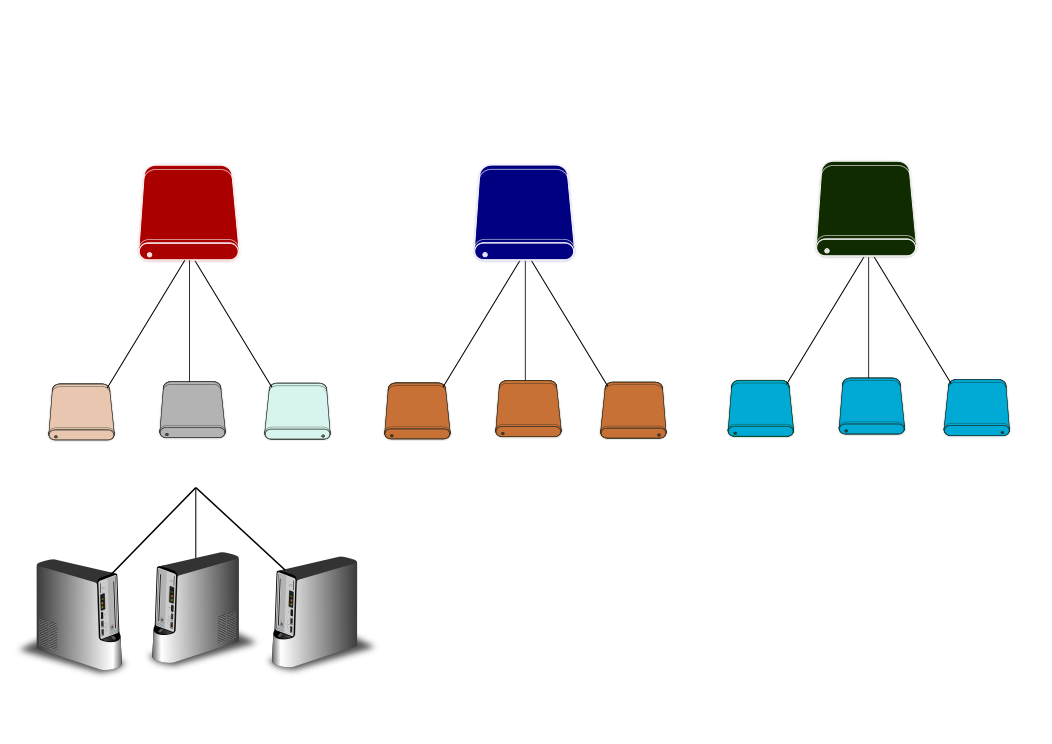

clusterducks employs a tiered storage hierarchy:

- Volumes are top-level objects that form the basis for Group images

- Groups are clones of Volumes and take up only the space of their differences from their parent Volume

- Devices are clones of Groups and take up only the space of their temporary storage

- vDisks may be assigned to Devices. Linux devices may use overlayfs to separate local changes from the OS image.
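The Volume, Group, and Device tiers above map naturally onto ZFS snapshots and clones. The following is a minimal sketch, not clusterducks' actual implementation: it only builds the `zfs` command lines for one chain. The pool name `tank`, the dataset names, and the `@base` snapshot name are assumptions for illustration, and it assumes plain filesystem datasets (zvol-backed images would be created with `zfs create -V` instead).

```python
def clone_chain(pool: str, volume: str, group: str, device: str) -> list[list[str]]:
    """Build zfs commands that link Volume -> Group -> Device as clones.

    All dataset and snapshot names here are hypothetical examples.
    """
    vol = f"{pool}/{volume}"
    grp = f"{pool}/{group}"
    dev = f"{pool}/{device}"
    return [
        # Top-level Volume with a quota, empty at creation.
        ["zfs", "create", "-o", "quota=40G", vol],
        # A clone is always created from a snapshot of its parent.
        ["zfs", "snapshot", f"{vol}@base"],
        ["zfs", "clone", f"{vol}@base", grp],
        # The Device is in turn a clone of a Group snapshot.
        ["zfs", "snapshot", f"{grp}@base"],
        ["zfs", "clone", f"{grp}@base", dev],
    ]


if __name__ == "__main__":
    # Print the commands instead of running them, so the sketch is safe to try.
    for cmd in clone_chain("tank", "volume-a", "group-a-1", "device-1"):
        print(" ".join(cmd))
```

Printing the commands rather than executing them keeps the example harmless on a machine without ZFS; each list could be passed to `subprocess.run` on a real server.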

Example:

- Volume A is created with a quota of 40GB and initially contains no data (0B on-disk)

- Group A-1 is created, initially contains no data (0B on-disk)

- Device 1's OS is installed into a temporary device image (~10GB)

- Group A-1 is updated using Device 1 and now contains the OS installation (10GB)

- During the update, Device 1 is reprovisioned from the newly created Group A-1 snapshot (0B on-disk)

- Group A-1 is promoted to Volume A

  - Volume A now contains Windows 10 installation (10GB on-disk)

  - Group A-1 is empty (0B on-disk)

- More groups can be created as clones of Volume A:

  - Group A-1 contains contents of Volume A (only OS installation)

    - 0B on-disk (references 10GB)

  - Group A-2 contains contents of Volume A plus "CAD Software 2016 (new testing version)"

    - 5GB on-disk (references 15GB)

  - Group A-3 contains contents of Volume A plus "CAD Software 2015 (production version)"

    - Users will be migrated to Group A-2 when testing is complete

    - 5GB on-disk (references 15GB)

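Because of the three-tier hierarchy, similar images share a common core, and the configuration above consumes only 20GB on disk (10GB for Volume A plus 5GB each for Groups A-2 and A-3). With independent images (no linked clones), the above configuration would consume 45GB. Larger networks, with more groups and devices sharing each Volume, will see greater savings.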
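The on-disk figures from the example can be checked with a small model. This is only an illustrative sketch: the `Dataset` class is a hypothetical stand-in for a ZFS dataset, where `own_gb` plays the role of the space the dataset itself has written and `referenced_gb()` the data visible through it, including what it inherits from its parent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Dataset:
    """Hypothetical model of a volume or clone in the hierarchy."""
    name: str
    own_gb: int                          # data written by this dataset itself
    parent: Optional["Dataset"] = None   # the dataset this one was cloned from

    def referenced_gb(self) -> int:
        """Data visible through this dataset: its own plus its parent's."""
        inherited = self.parent.referenced_gb() if self.parent else 0
        return self.own_gb + inherited


# Figures taken from the example above.
volume_a = Dataset("Volume A", 10)
groups = [
    Dataset("Group A-1", 0, volume_a),
    Dataset("Group A-2", 5, volume_a),
    Dataset("Group A-3", 5, volume_a),
]

# With linked clones, shared blocks are stored once, so only own data counts.
on_disk_total = volume_a.own_gb + sum(g.own_gb for g in groups)
print(f"on-disk total: {on_disk_total}GB")
for g in groups:
    print(f"{g.name}: {g.own_gb}GB on-disk, references {g.referenced_gb()}GB")
```

On a real server, the equivalent numbers can be read from the `used` and `referenced` properties reported by `zfs list`.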

A tiered storage hierarchy can be considered a poor man's deduplication: it avoids the very high bandwidth and memory requirements of calculating and storing the hash table needed for true inline deduplication.

With the device operating system configured to redirect data to separate, persistent storage, the device image can be reprovisioned every time it starts up, keeping the images neat and tidy.
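Reprovisioning a device at startup amounts to discarding its clone and creating a fresh one from the Group snapshot. The sketch below shows one way this could be expressed, assuming the device image is a ZFS clone and that persistent data lives on a separate dataset that is not touched. The function name and the dataset names are hypothetical, and this is not necessarily how clusterducks performs the step.

```python
def reprovision_commands(group_snapshot: str, device_dataset: str) -> list[list[str]]:
    """Build zfs commands that reset a device image to its Group snapshot.

    Hypothetical helper: discards everything the device wrote to its clone.
    """
    if "@" not in group_snapshot:
        raise ValueError("group_snapshot must name a snapshot, e.g. pool/group@snap")
    return [
        # Remove the old clone (and any snapshots taken of it).
        ["zfs", "destroy", "-r", device_dataset],
        # Recreate it as a fresh clone, initially using no extra space.
        ["zfs", "clone", group_snapshot, device_dataset],
    ]


if __name__ == "__main__":
    for cmd in reprovision_commands("tank/group-a-1@base", "tank/device-1"):
        print(" ".join(cmd))
```

Because the new clone shares all of its blocks with the Group snapshot, the reset is quick and the device starts again at 0B on-disk.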